AI & Mental Health Therapy

Buzz about the potential uses of artificial intelligence (AI) in the mental health space has exploded since OpenAI launched ChatGPT last fall. Today, ChatGPT has more than 13 million daily users. Its ability to mimic natural human language and to write sonnets, screenplays, and even news articles has captured our imagination and spurred much discussion about its possible uses and pitfalls. Read on to learn more about AI and mental health therapy.

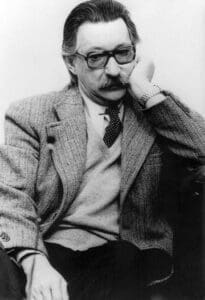

Joseph Weizenbaum (pictured here) is considered one of the “fathers of artificial intelligence.”

ELIZA, the original AI in the mental health therapy room

The human fascination with robots, machines, and artificial intelligence is decades, if not centuries, old. Since at least the 1960s, scientists and technologists have been trying to build a computer therapist that could mimic the language of a human psychologist. Beginning in 1964, M.I.T. computer scientist Joseph Weizenbaum developed ELIZA, a natural language program that could hold a conversation with users through basic mirroring statements and pattern recognition. ELIZA quickly became popular, and although Weizenbaum eventually became a vociferous critic of the use of artificial intelligence in therapy, excitement about the computer therapist had already spread.

Today’s AI mental health bots are far more sophisticated than ELIZA. Apps like Woebot and Koko use forms of cognitive behavioral therapy (CBT) skill-building to help users cope with anxiety, depression, grief, and stress. In a study published in the Journal of Medical Internet Research, Woebot reduced users’ symptoms of depression and anxiety compared with a control group.

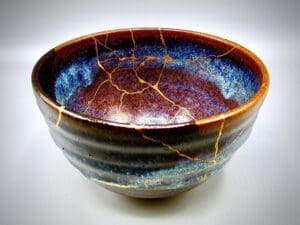

Kintsugi is the Japanese art of repairing broken pottery by mending the areas of breakage with lacquer dusted or mixed with powdered gold, silver, or platinum.

Increasing access and ethical issues?

Advocates of AI in mental health cite its capacity to reach more users and remove barriers to entry, including cost, lack of access to health care, and distance from treatment services. One in four Americans reports having to choose between mental health care and paying for daily necessities. Rather than drive an hour or more to see a therapist, a client could feasibly open their smartphone and chat with an AI therapist on demand.

AI is even being used to detect anxiety and depression in clients by analyzing audio clips from therapy sessions. Kintsugi, a mental health startup, uses machine learning to “detect depression at double the rate of primary care doctors” by looking at subtle changes in a patient’s tone, speech, and language.

However, the use of AI in therapy raises important ethical concerns. Currently, there is no regulation or oversight of how chatbots are developed and used in mental health. This lack of regulation could pose serious risks to clients’ well-being, safety, and data security. Furthermore, AI is rife with the same implicit biases as its human programmers, and its widespread use could perpetuate and exacerbate existing inequities.

Sam Altman (pictured here) is the CEO of OpenAI, which created ChatGPT.

Meet my AI chatbot therapist

So, can chatbots replace therapists altogether? Human connection and relationship-building within the therapeutic dyad are at the heart of many clinical modalities. The therapy room can be a microcosm of our broader lives, and how we relate to another person within the holding space of a therapy session can tell us a lot about our relationships outside it. Turning away from face-to-face human connection might be convenient, but it does not benefit our health or well-being. The COVID-19 pandemic showed all too clearly how social isolation and loneliness affect our health and well-being.

What does ChatGPT think about all this? When asked whether AI chatbots could replace human therapists, it responded: “AI chatbots are not able to replace human therapists in their ability to provide empathetic and nuanced care. While chatbots can be programmed to respond to certain prompts or specific language, they are not able to fully understand the complexity of human emotions, thoughts, and experiences.”

The more we learn about chatbots and the uses of AI, the more evident it becomes that these tools will be incredibly useful in the future of therapeutic intervention. However, it may be just as important to remember that they are not a replacement for interpersonal relationships, human connection, and relational work in therapy. Rather, chatbots and AI should be used as tools that we can draw upon to supplement clinical work.

Yasmine Beydoun (pictured here) is a graduate of CFR’s Master’s degree program and will begin seeing clients in June 2023.

About the Author

Yasmine Beydoun is a Master of Social Work (MSW) Student Intern in our ISI Program. If you have questions about AI and mental health therapy, you may reach Yasmine via email at ybeydoun@councilforrelationships.org or by phone at 215.382.6680 ext. 7008.

If you have questions about CFR’s ISI Program or about Student Interns, please contact CFR’s Director of Clinical Internships, Allen-Michael Lewis, MS, LMFT, AS, at alewis@councilforrelationships.org or by phone at 215.382.6680 ext. 4206.

About CFR’s Professional Education

CFR was one of the first training centers for marriage and family therapy in the country. We currently offer Master’s degree and postgraduate certificate programs as well as continuing education and workshops.